If you initialized a

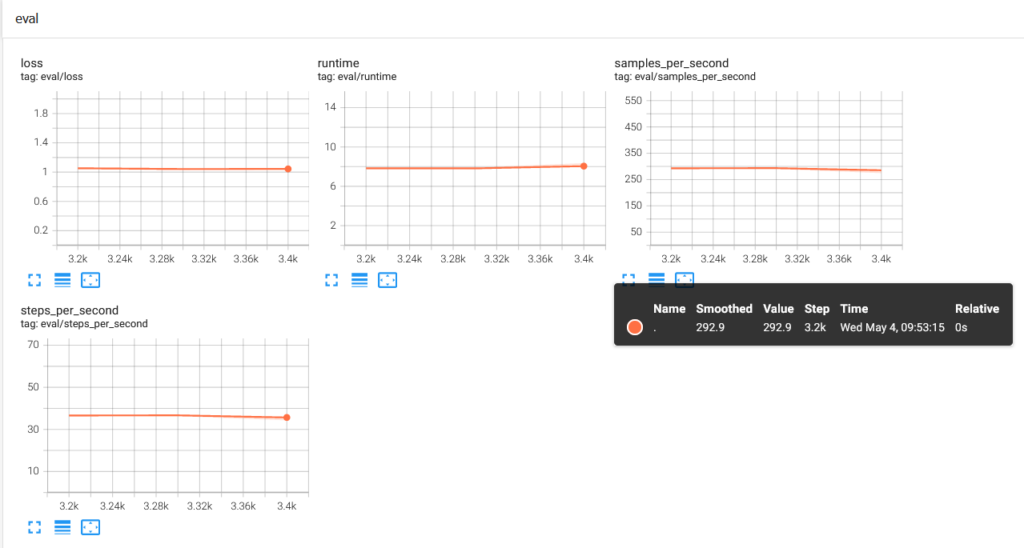

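One important argument is `evaluation_strategy`, which defaults to `"no"`, meaning no evaluation is done during training. You can set it to `"steps"` to evaluate every `eval_steps` steps, or to `"epoch"` to evaluate at the end of each epoch. In either case, make sure to pass an evaluation dataset to the Trainer. (In recent versions of Hugging Face Transformers, this argument has been renamed to `eval_strategy`.)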
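To make the two strategies concrete, here is a small standalone sketch that lists the global steps at which evaluation would run. It is a simplified model, not the Trainer's own scheduling code. It assumes one optimizer step per batch, no gradient accumulation, and no `eval_delay`. The function `evaluation_steps` is hypothetical.

```python
import math


def evaluation_steps(num_examples, batch_size, num_epochs, strategy, eval_steps=None):
    """Return the global steps at which evaluation would run (simplified model).

    Assumptions: one optimizer step per batch (the last partial batch still
    counts as a step), no gradient accumulation, no eval_delay.
    """
    steps_per_epoch = math.ceil(num_examples / batch_size)
    total_steps = steps_per_epoch * num_epochs
    if strategy == "no":
        # default: no evaluation during training
        return []
    if strategy == "steps":
        # evaluate every eval_steps optimizer steps
        return list(range(eval_steps, total_steps + 1, eval_steps))
    if strategy == "epoch":
        # evaluate once at the end of each epoch
        return [steps_per_epoch * (epoch + 1) for epoch in range(num_epochs)]
    raise ValueError(f"unknown strategy: {strategy!r}")


# 1,000 examples, batch size 32, 3 epochs -> 32 steps per epoch, 96 steps in total
print(evaluation_steps(1000, 32, 3, "steps", eval_steps=25))  # [25, 50, 75]
print(evaluation_steps(1000, 32, 3, "epoch"))                 # [32, 64, 96]
```

With `"steps"`, evaluation is tied to the step counter and ignores epoch boundaries. With `"epoch"`, the number of evaluations equals the number of epochs.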

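```python
# example snippet
from torch.utils.tensorboard import SummaryWriter
from transformers import Trainer, TrainingArguments
from transformers.integrations import TensorBoardCallback

# define hyperparameters
training_args = TrainingArguments(
    # [..]
    evaluation_strategy="steps",  # evaluate every eval_steps steps
    eval_steps=100,
)

# create a TensorBoard writer and pass it to a TensorBoardCallback
writer = SummaryWriter("path/to/tensorboardlogs")
tbcallback = TensorBoardCallback(writer)

# define the Trainer
trainer = Trainer(
    # [..]
    args=training_args,
    train_dataset=train,
    eval_dataset=eval,  # required when evaluation is enabled
    callbacks=[tbcallback],
)

trainer.train()
```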

We also register a `TensorBoardCallback`, so that logged metrics, including the results of each evaluation, are written directly to TensorBoard.
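In TensorBoard, evaluation metrics show up grouped under an `eval/` prefix. The sketch below is a simplified, standalone approximation of what the callback does with each set of logged metrics. It maps `eval_*` keys to `eval/*` tags and writes each numeric value as a scalar at the current global step. The prefix mapping is based on recent Transformers versions and may differ in yours. `RecordingWriter`, `rewrite_keys`, and `log_metrics` are hypothetical. `RecordingWriter` stands in for `SummaryWriter` and only records `add_scalar(tag, value, step)` calls.

```python
class RecordingWriter:
    """Hypothetical stand-in for SummaryWriter that records add_scalar calls."""

    def __init__(self):
        self.scalars = []

    def add_scalar(self, tag, value, global_step):
        self.scalars.append((tag, value, global_step))


def rewrite_keys(logs):
    """Group metric names by prefix (approximation of the TensorBoard integration)."""
    grouped = {}
    for key, value in logs.items():
        if key.startswith("eval_"):
            grouped["eval/" + key[len("eval_"):]] = value
        elif key.startswith("test_"):
            grouped["test/" + key[len("test_"):]] = value
        else:
            grouped["train/" + key] = value
    return grouped


def log_metrics(writer, logs, global_step):
    """Write every numeric metric as a scalar at the current global step."""
    for tag, value in rewrite_keys(logs).items():
        if isinstance(value, (int, float)):
            writer.add_scalar(tag, value, global_step)


writer = RecordingWriter()
# illustrative (made-up) metrics, as they might look after the evaluation at step 100
log_metrics(writer, {"eval_loss": 0.42, "eval_runtime": 3.1, "epoch": 0.5}, global_step=100)
print(writer.scalars)
# [('eval/loss', 0.42, 100), ('eval/runtime', 3.1, 100), ('train/epoch', 0.5, 100)]
```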

Further reading:

Fine-tuning a model with the Trainer API, available at: https://huggingface.co/course/chapter3/3?fw=pt